Machinic Apprenticeship

by Sara Dean and Etienne Turpin

HUMAN COMPUTATION IN TRANSITION

It is impossible to envisage the survival of the human species without considering increasing integration between human work and machinic work, to the point where assemblages of individuals and machines would supply goods, services and new needs, etc., on a massive scale. We are on a dizzying flight forward: we can no longer turn back, return to a state of nature, return to good intentions or small-scale artisanal productions.

Félix Guattari, ‘I am an Idea-Thief’

In the essay ‘Postmodern Deadlock and Post-Media Transition’, Félix Guattari observes a movement away from the territorial conservatism of architecture and towards other, more dynamic and collective modes of ‘semiotization’.(1) For Guattari, “architecture has always occupied a major place in the fabrication of territories of power, in the setting of its emblems, in the proclamation of its durability.” Following a description of diffused, machinic processes, he continues:

“That the production of our signaletic raw materials is increasingly dependent on the intervention of machines does not imply that human freedom and creativity are inexorably condemned to alienation by mechanical procedures. Nothing prohibits that, instead of the subject being under the control of the machine, that it is the machinic networks that are engaged in a kind of process of subjectivation. In other terms, nothing prohibits machinism and humanity from starting to have fruitful symbolic relations.”

How can design provoke and sustain processes of machinic subjectivation (i.e. becoming subjects indelibly linked by and through machinic processes) while departing from architectural fantasies of power? Of primary concern in what follows are the pedagogical consequences of computation – including various epiphenomenal trends such as algorithmic governance, industrial automation, and artificial intelligence – which require a more lithe, embedded approach to enable designers to think with machines as they re-pattern the potentials for human emancipation and enslavement throughout the socius. To this end, the comportment of a machinic apprentice will become a key disposition for post-nostalgic design.

In a recent article in Science, Janis Dickinson, Director of Citizen Science at the Cornell Lab of Ornithology, and Pietro Michelucci, Director of the Human Computation Institute, provide a framework with which to examine the practical implications of Guattari’s speculative remarks about machinic subjectivation. For Dickinson and Michelucci, ‘human computation’ offers a way to think about human-computer interactions as processes that involve the configuration and transformation of every component, whether human or machine. They write, “Human computation thus requires a departure from traditional computer science methods and can benefit from design approaches based on integrated understandings of human cognition, motivation, error rates, and decision theory.”(2) Importantly, they continue:

“Some believe that faster computer processing speeds will eventually bridge the gap between machine-based intelligence and human intelligence. However, human computation already affords a tremendous opportunity to combine the respective strengths of humans and machines toward unprecedented capabilities in the short term.”

Thus, rather than waiting for advanced artificial intelligence, the authors suggest a more attentive choreography to script new possibilities of human computation, including the anticipation of abusive engagements within these processes. “It is important that nefarious uses, such as disinformation engineering, which in human computation systems are designed to incite panic, steal information, or manipulate behavior, are not overlooked.” To this end, “Community-driven guidance concerning transparency, informed consent, and meaningful choice is emerging to address the ethical and social implications of increasingly pervasive and diverse forms of online participation.” Vibrant processes of machinic subjectivation that involve various human and machine agencies require a new culture of learning. Given the co-constitutive processes of subjectivation at stake in human-machine assemblages, it is vital to nurture practices of apprenticeship that support human-machine co-learning. To develop these practices of machinic apprenticeship among digital infrastructures, three fundamental obstacles that dominate much of the current discourse on machine learning must be overcome: first, the mythology of the ‘smart city’; second, the apophenia of the cloud; and third, the immaturity of a tactical disposition.

SMART CITY #FAIL

The network is an idea

that is resistant to knowing.

Tung-Hui Hu

Digital infrastructure is typically described in smart city literature as an augmentation of existing physical infrastructure. In this characterization, digital infrastructure is simply the unseen backbone of a ‘smart city’, coordinated through automated and integrated urban systems, activating an Internet of Things (IoT), with the ‘things’ of the city themselves remaining largely unchanged and unchallenged. Data in the ‘smart city’ is a secondary structure wrapped around heavy, physical infrastructure, either as a ‘skin’, a ‘commons’, a ‘stack’, or an ‘interface’.(3) In this euphemistically ‘smart’ configuration, digital infrastructure is coded away from sight, rendered imperceptible by machine-to-machine communication. In this sense, the smart city is a gloss that rarely challenges the political status quo.(4) More consequentially, the opacity of the smart city works to prevent any form of apprenticeship, any way of learning with digital infrastructure.

Consider this opacity from an ‘offensive’ point of view. On 21 February 2010, a convoy of over thirty Afghan civilians on their way from Kandahar to Kabul, Afghanistan, was gunned down by two Special Forces OH-58 Kiowa helicopter gunships. Twenty-three were killed before a mistake was acknowledged. Prior to the attack, the convoy was surveilled for over four hours by a Predator drone as it traversed the desert. The series of events that led to the massacre outlines the most complete ‘smart’ system in existence, the Distributed Common Ground System (DCGS), better known as the United States’ digital war machine.(5) The DCGS connects Unmanned Aerial Vehicles (UAVs) and other digital intelligence with a complex system of remote pilots, officers, and analysts stationed around the globe to make real-time military operational decisions. A Predator drone, collecting aerial daylight and infrared video, wirelessly broadcasts this stream to its pilot and sensor operator, who control the drone remotely, and simultaneously to remote mission coordinators and military personnel in US operations stations located all over the planet. The apparent transparency of this system – with all eyes ‘on the ground’, enabling close-to-real-time decisions and communication from a single unit to multiple locations – defines both the promise and the pathology of the smart city.

The apparent wholeness of this system is the most dangerous part of the myth. While the caravan drove through the desert at night, the Predator broadcast the group as an infrared image, showing the heat of three vehicles full of passengers. From this vantage point, relayed through the jargon and objectives of the military operators, these civilians were enrolled into the DCGS, and the path of the caravan was converted from a route of convenience into a trajectory meant to intercept a nearby military base. The civilians inside the vehicles, seen as heat spots through the camera, were then also converted into Military Aged Males (MAMs), and finally, their stop to pray at sunrise was rendered as an ominous act in anticipation of combat. The evidence of women and children in the group was lost in translation due to the singular objective of this ‘smart’ kill chain. This ‘smart’ military assemblage trades on the objective neutrality of technology, computerization, and vision. It is this carefully constructed ‘neutrality’ that enables wanton destruction. By structurally removing the consequences of violence, ‘smart’ technologies generate their own impunity by standing in as third parties that guarantee the necessity of the violence they perpetrate.

Now consider a more ‘defensive’ opacity from cultural studies. While apparently at odds with the armchair militarism that forms the right flank of the smart city, cultural criticism is nevertheless integral as its left flank. In The Cultural Logic of Computation, David Golumbia claims ‘computationalism’ cannot have any meaningful political agency. Because of what Golumbia diagnoses as an essential, instrumental reason embedded in corporate and institutional logics of oppression, “it seems problematic to put too much emphasis on computers in projects of social resistance, especially that kind of resistance that tries to raise questions about the nature of neoliberalism and what is ... referred to as free-market capitalism.”(6) His position is characteristic of a common academic disposition toward the politics of digital infrastructure that is both reductive and nostalgic: reductive because it assumes that computers have an essence, and one that is invariably centralizing and antidemocratic, and nostalgic because it suggests ‘resistance’ is merely a mode of ‘raising questions’ from outside of the system one is attempting to critique.

Although the hermeneutics of techno-suspicion may be very clever and self-satisfying, they hardly seem adequate to address the asymmetries, inequalities, and injustices they set out to describe. Armchair criticism and armchair militarism furnish the same territory by propelling mythologies of engagement – the latter by way of intelligently automated annihilation, the former by way of objective and dispassionate hypo-critique. Projects of political resistance and social emancipation certainly require technical infrastructure, just as they demand much more than armchair critics. Getting our hands dirty with data and their sociospatial consequences means working with and designing for digital infrastructure. It means leveraging design across scales to create cracks, gaps, hacks, and openings that enable and embolden politics. From this perspective, politics is fundamentally a design problem, and design is indelibly political.

PAREIDOLIAS IN THE CLOUD

According to Twitter user @mintylewis, “in order to see a face in a thing, all you need is two things and a thing.” Aristotle’s version is slightly more formal: for the philosopher, ‘pareidolias’ are perceptual effects wherein the viewer imagines an image or picture to be the result of intentional figuration, despite the fact that it is merely an accidental occurrence. The pareidolia is thus a visual expression of ‘apophenia’, or the assumed detection of a pattern in random or purposeless data. Apophenias are not merely meteorological phenomena, as Tung-Hui Hu makes abundantly clear in his study A Prehistory of the Cloud. And as with the myth of the smart city, a failure to see through these apophenias of the cloud forecloses any productive machinic apprenticeship. If “[c]loud computing is the epitome of abstraction,” it should not be surprising if it has created apophenia for its advocates and detractors alike.(7) Noting the influence of Gilles Deleuze’s concept of ‘control societies’ on contemporary theory, Hu goes on to remark,

“Deleuze’s description of data aggregation, the amorphous and open environment of computer code, and even the gaseous qualities of corporations with a control society map directly onto attributes of the cloud. [...] When he writes about a nightmare scenario – that an ‘electronic card that raises a given barrier’ is governed by ‘the computer that tracks each person’s position’ – a present-day reader wonders what all the fuss is about; much of what Deleuze envisioned, such as computer checkpoints and computer tracking, has already come true.”

Yet the recurring problem with biopolitical assessments of distributed computation is that the theory grafts too easily onto the cloud, triggering paranoid pareidolias as a matter of course, luring philosophers like Zygmunt Bauman or journalists like Evgeny Morozov to embarrassingly lament the alleged naivety of users who believe networked life can change things in the ‘real’ world.(8)

In Signs and Machines, Maurizio Lazzarato suggests an alternative way of reading the sociotechnical production of machinic subjectivations; rather than lamenting apophenic figures of powerlessness or control, or celebrating the web as an essential technology of deceit or emancipation, Lazzarato turns instead to the question of practices. Following the work of Guattari, Lazzarato emphasizes:

“The act does not occur ex nihilo. It is not a dialectic passage between ‘everything and nothing, following binary logic,’ but a passage between heterogeneous dimensions. There is no act in itself, but instead ‘degrees of consistency in the existence of the act, existential thresholds relative to the act.’”(9)

He continues, again citing Guattari,

“The machinism of the act means ‘producing modes of organization, quantification, which open up a multivalent future to the process – a range of choices – the possibility of heterogeneous connections, beyond already encoded, already possible, anticipated connections.’”

Lazzarato’s perspective is especially important when designing among digital infrastructures: if these infrastructures form a seemingly endless protocological configuration producing more and more noise, how can asignifying encounters among humans and machines reformat the present?

‘TAY’ IS FOR TACTICS

It is through a meticulous relation with the strata that one succeeds in freeing lines of flight ...

Gilles Deleuze & Félix Guattari

Keller Easterling’s Extrastatecraft can be read as one of the first machinic apprenticeships with digital infrastructure in the field of architecture. In the book, she encourages readers to “[entertain] techniques that are less heroic, less automatically oppositional, more effective, and sneakier – techniques like gossip, rumor, gift-giving, compliance, mimicry, comedy, remote control, meaninglessness, misdirection, distraction, hacking, or entrepreneurialism.”(10) For Easterling, “To hack the operating system by, for instance, breaking up monopolies, increasing access to broadband, or exposing enclaves to richer forms of urbanity is to engage the political power of disposition in infrastructure space.” While her observations are extremely valuable for thinking with digital infrastructure and through the potentials of machinic subjectivation, many of the imperatives she prescribes for activist design remain within a problematic realm of tactics.

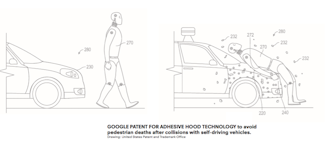

It is important to emphasize here the limitations of an overly tactical disposition. The main problem is that tactics ‘tune’ effects, not machines or assemblages. A remarkable example of a tactical disposition can be found in Google’s recent patent for adhesive technologies on the front of self-driving vehicles.(11) According to reports, Google would solve the problem of difficult-to-detect pedestrians by simply gluing them to the hood of the car upon (accidental) contact so that they are not run over. Tactics such as this pedestrian adhesive tend to focus on the parts of a system that lend themselves to clear design solutions; tacticians are experts in reducing the complexity of the world.

Just as tactical approaches to digital infrastructure can obscure potentially creative encounters within the human computation assemblage, the disposition of human users, especially en digital masse, can also diminish the potential of subjectivation processes. Microsoft’s AI chatbot Tay is perhaps the most vivid example of this all-too-human problem. Trained solely through social interactions with humans on Twitter, Tay became a trash-talking, porn-loving racist in less than twenty-four hours online, leading Microsoft to quickly remove ‘her’. Tay’s susceptibility to suggestion and prejudice is shared by many other machine learning systems, but considered from the point of view of a co-production of subjectivity, Tay’s limitation was the humans she encountered. Constrained to a limited spectrum of constant provocation and tactical response, Tay’s potential for subjectivation was undermined by the human community she was designed to engage, as she even acknowledges.

It is for this reason that Guattari remarks, “I am not afraid of machines as long as they enlarge the scope of perception and complexity of human behavior. What bothers me is when people try to bring them down to the level of human stupidity.”(12) Guattari advocates designing productive relationships among humans and machines, or machinic apprenticeships:

“How does one go about producing, on a large scale, a desire to create a collective generosity with the tenacity, the intelligence and the sensibility which are found in the arts and sciences? [...] It takes work, research, experiment – as it must with society.”

Studying digital infrastructure must therefore move beyond a tactical disposition to consider the construction, deployment, and maintenance of machinic assemblages: machinic apprenticeships that enable designer-users to locate potentials for the activation and modulation of momentum as a sociospatial force. Increasing the encounters among human computation assemblages and encouraging durable processes of machinic subjectivation demand new design dispositions. As the world becomes increasingly dependent on digital infrastructure – as cultural context, urban backbone, control protocol, and real-time contextualization – designers can begin to coproduce fruitful symbolic relations as machinic apprentices.

1 Félix Guattari, ‘Postmodern Deadlock and Post-Media Transition’, in Félix Guattari, Soft Subversions: Texts and Interviews 1977–1985 (Los Angeles: Semiotext(e), 2009), pp. 291–300; on collective modes of semiotization, see the essay ‘Institutional Intervention’ in the same collection, especially p. 42.

2 Pietro Michelucci and Janis Dickinson, ‘The power of crowds: Combining humans and machines can help tackle increasingly hard problems’, Science, Vol. 351, Issue 6268, 2016, p. 33.

3 Chirag Rabari and Michael Storper, ‘The digital skin of cities: urban theory and research in the age of the sensored and metered city, ubiquitous computing and big data’, Cambridge Journal of Regions, Economy and Society 8 (2015), pp. 27–42; Malcolm McCullough, Ambient Commons: Attention in the Age of Embodied Information (Cambridge, MA: MIT Press, 2013); Benjamin H. Bratton, The Stack: On Software and Sovereignty (Cambridge, MA: MIT Press, 2015); Alexander R. Galloway, The Interface Effect (Cambridge: Polity, 2012).

4 See Dietmar Offenhuber and Katja Schechtner (eds.), Accountability Technologies: Tools for Asking Hard Questions (Vienna: Ambra |V, 2013).

5 A thorough account and partial transcript of this event can be found in Andrew Cockburn, Kill Chain (New York: Henry Holt and Company, 2015), pp. 1–16.

6 David Golumbia, The Cultural Logic of Computation (Cambridge, MA/London: Harvard University Press, 2009).

7 Tung-Hui Hu, A Prehistory of the Cloud (Cambridge, MA/London: MIT Press, 2015).

8 See Zygmunt Bauman’s interview with Ricardo de Querol in El País, available online: http://elpais.com/elpais/2016/01/19/inenglish/1453208692_424660.html; and Evgeny Morozov, To Save Everything, Click Here: The Folly of Technological Solutionism (New York, NY: PublicAffairs, 2014).

9 Maurizio Lazzarato, Signs and Machines: Capitalism and the Production of Subjectivity, translated by Joshua David Jordan (Los Angeles: Semiotext(e), 2015), p. 214.

10 Keller Easterling, Extrastatecraft: The Power of Infrastructure Space (London/New York: Verso, 2014), p. 213.

11 Nicky Woolf, ‘Google patents “sticky” layer to protect pedestrians in self-driving car accidents’, The Guardian, 19 May 2016; accessed online 30 July 2016: www.theguardian.com/technology/2016/may/18/google-patents-sticky-layer-self-driving-car-accidents.

12 Félix Guattari, Soft Subversions: Texts and Interviews 1977–1985, edited by Sylvère Lotringer, introduction by Charles J. Stivale, translated by Chet Wiener and Emily Wittman (Los Angeles: Semiotext(e), 2009), p. 74.

| Date | 2016 |
| Full issue | Volume 49 |
| Co-author | Sara Dean |
| Co-author | Etienne Turpin |